First we wanted to use ceph-bluestore-tool bluefs-bdev-new-wal. However, it turned out that it is not possible to ensure that the second DB is actually used.

For this reason, we decided to migrate the entire BlueFS of the OSD to an SSD/flash device.

Verify the current OSD BlueStore setup

ceph-bluestore-tool show-label --dev <device> [...]
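To check every device of an OSD in one go, the command can be generated per device link. The following is a minimal sketch, assuming the OSD directory exposes its devices under the usual link names block, block.db and block.wal. The helper name print_show_label_cmds and the path in the usage comment are hypothetical. It only prints the commands and does not run them.

```shell
#!/bin/sh
# Print one show-label command per BlueStore device link found in an OSD directory.
# Assumption: the links are named block, block.db and block.wal.
print_show_label_cmds() {
    osd_path=$1
    for name in block block.db block.wal; do
        # -e follows symlinks, so missing or dangling links are skipped
        if [ -e "$osd_path/$name" ]; then
            echo "ceph-bluestore-tool show-label --dev $osd_path/$name"
        fi
    done
}

# Hypothetical usage (the path is an assumption, adjust to your deployment):
# print_show_label_cmds /var/lib/ceph/osd/ceph-0
```

An OSD without a separate DB or WAL device simply yields a single line for block.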

Verify the current size of the OSD BlueStore DB

ceph-bluestore-tool bluefs-bdev-sizes --path <osd path>
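The sizes in this output are usually printed as hexadecimal byte counts, although the exact format may differ between Ceph releases, so treat that as an assumption. A small helper (hex_to_gib is a hypothetical name) makes such a value easier to compare with the size of the device:

```shell
#!/bin/sh
# Convert a byte count to whole GiB. Shell arithmetic accepts the 0x prefix,
# so a hexadecimal value can be passed in as printed.
hex_to_gib() {
    echo $(( $1 / 1073741824 ))
}

hex_to_gib 0x1900000000   # prints 100
```

The division truncates, so a device slightly smaller than a round number of GiB is reported one GiB lower.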

Migrate the BlueFS data to the new device

ceph-bluestore-tool bluefs-bdev-migrate --path <osd path> --dev-target <new-device> --devs-source <device1> [--devs-source <device2>]
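Since ceph-bluestore-tool generally needs exclusive access to the OSD, the migration is typically wrapped in a stop and start of the OSD service. The sketch below only prints such a sequence as a dry run. It assumes a systemd unit named ceph-osd@<id> and an OSD directory of /var/lib/ceph/osd/ceph-<id>, neither of which holds for every deployment (containerized setups differ). The function name plan_bluefs_migration and the devices in the usage comment are hypothetical.

```shell
#!/bin/sh
# Print the command sequence for migrating BlueFS of one OSD (dry run only).
# Assumptions: systemd unit ceph-osd@<id>, OSD directory /var/lib/ceph/osd/ceph-<id>.
# Arguments: <osd id> <target device> <source device> [<source device> ...]
plan_bluefs_migration() {
    osd_id=$1
    target=$2
    shift 2
    osd_path=/var/lib/ceph/osd/ceph-$osd_id
    cmd="ceph-bluestore-tool bluefs-bdev-migrate --path $osd_path --dev-target $target"
    # Each source device gets its own --devs-source option
    for src in "$@"; do
        cmd="$cmd --devs-source $src"
    done
    echo "systemctl stop ceph-osd@$osd_id"
    echo "$cmd"
    echo "systemctl start ceph-osd@$osd_id"
}

# Hypothetical usage (device names are assumptions):
# plan_bluefs_migration 0 /dev/vg_ssd/osd0_db /var/lib/ceph/osd/ceph-0/block.db
```

Printing the commands first leaves room to review the source and target devices before anything touches the OSD.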

Verify the size of the OSD BlueStore DB after the migration

ceph-bluestore-tool bluefs-bdev-sizes --path <osd path>

If the size does not correspond to the new target size, execute the following command:

ceph-bluestore-tool bluefs-bdev-expand --path <osd path>

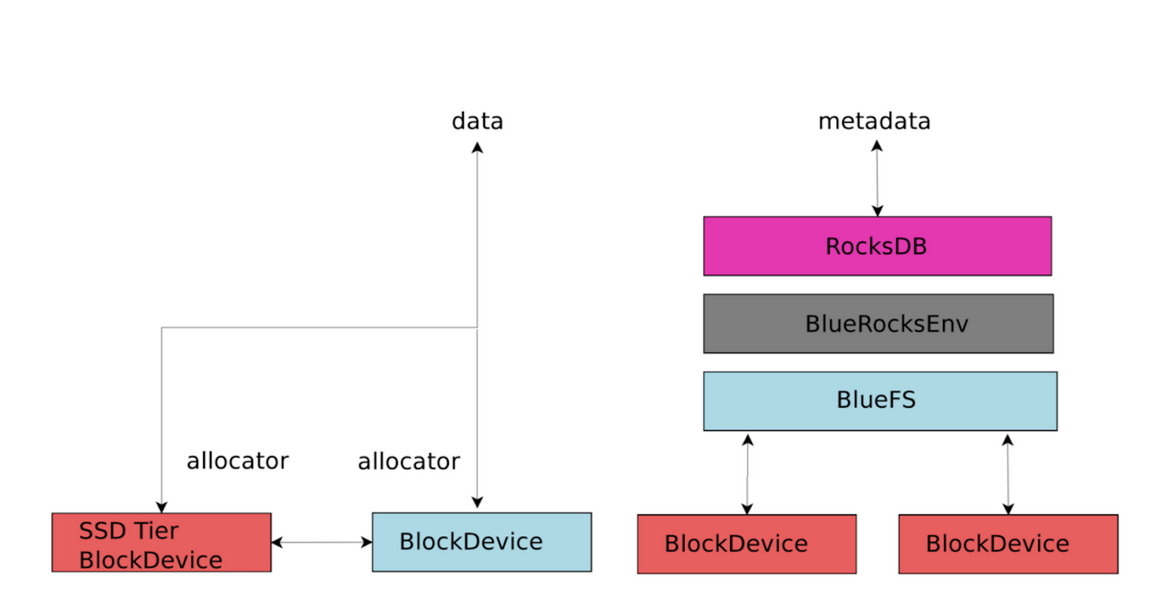

> Instruct BlueFS to check the size of its block devices and, if they have expanded, make use of the additional space. Please note that only the new files created by BlueFS will be allocated on the preferred block device if it has enough free space, and the existing files that have spilled over to the slow device will be gradually removed when RocksDB performs compaction. In other words, if there is any data spilled over to the slow device, it will be moved to the fast device over time.

Source: https://docs.ceph.com/en/octopus/man/8/ceph-bluestore-tool/#commands
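Whether the expand step is needed comes down to comparing the size BlueFS reports with the actual size of the target device. A minimal sketch of that check follows, assuming the reported size is taken by hand from the bluefs-bdev-sizes output (often hexadecimal) and the device size comes from something like blockdev --getsize64. The function name needs_expand and the example values are hypothetical.

```shell
#!/bin/sh
# Succeed (exit status 0) when BlueFS reports less space than the device offers.
# $1: size reported by BlueFS in bytes (a 0x-prefixed hex value is accepted)
# $2: actual device size in bytes, e.g. from: blockdev --getsize64 <device>
needs_expand() {
    reported=$(( $1 ))
    actual=$(( $2 ))
    [ "$reported" -lt "$actual" ]
}

# Example values only: 100 GiB reported on a 200 GiB device
if needs_expand 0x1900000000 214748364800; then
    echo "run: ceph-bluestore-tool bluefs-bdev-expand --path <osd path>"
fi
```

The check is deliberately strict: any shortfall triggers the hint, so small differences caused by reserved space may need a manual look.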

Verify the new OSD BlueStore setup

ceph-bluestore-tool show-label --dev <device> [...]

Update

You might be interested in a migration method on a higher layer with ceph-volume lvm.

docs.clyso.com/blog/ceph-volume-ceph-osd-migrate-db-to-larger-ssd-flash-device/

Appendix

> I'm trying to figure out the appropriate process for adding a separate SSD block.db to an existing OSD. From what I gather the two steps are:
>
> 1. Use ceph-bluestore-tool bluefs-bdev-new-db to add the new db device
> 2. Migrate the data ceph-bluestore-tool bluefs-bdev-migrate
>
> I followed this and got both executed fine without any error. Yet when the OSD got started up, it keeps on using the integrated block.db instead of the new db. The block.db link to the new db device was deleted. Again, no error, just not using the new db

www.spinics.net/lists/ceph-users/msg62357.html

Sources

docs.ceph.com/en/octopus/man/8/ceph-bluestore-tool

tracker.ceph.com/attachments/download/4478/bluestore.png

www.suse.com/support/kb/doc/?id=000020276