Open-sourcing the Chorus project

Today, we're excited to share that we've released the Chorus project under the Apache 2.0 License. In this blog post, let's talk about what Chorus is and why we made it.

At Clyso, we frequently help our customers migrate infrastructure, whether to or from the cloud or between cloud providers. Our work often centers on storage, particularly S3.

Like many others in the field, we initially relied on the fantastic Rclone tool, which handled the task well. However, when we set out to migrate a 100 TB bucket containing 100M objects, we recognized the need for an additional layer of automation. Migrating buckets of that size within a reasonable timeframe requires a machine with substantial RAM and network bandwidth to take advantage of Rclone's parallelism options.
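To see why bandwidth and parallelism matter at this scale, here is a back-of-the-envelope estimate for such a bucket. It assumes decimal units (1 TB = 10^12 bytes) and a fully saturated link with no protocol or per-request overhead; the 1 and 10 Gbit/s link speeds are illustrative assumptions, not measurements from our migration:

$$
\bar{s} = \frac{100\ \text{TB}}{100 \times 10^{6}\ \text{objects}} = \frac{10^{14}\ \text{B}}{10^{8}} = 10^{6}\ \text{B} \approx 1\ \text{MB per object}
$$

$$
t_{1\,\text{Gbit/s}} = \frac{8 \times 10^{14}\ \text{bit}}{10^{9}\ \text{bit/s}} = 8 \times 10^{5}\ \text{s} \approx 9.3\ \text{days}, \qquad
t_{10\,\text{Gbit/s}} = \frac{8 \times 10^{14}\ \text{bit}}{10^{10}\ \text{bit/s}} = 8 \times 10^{4}\ \text{s} \approx 22.2\ \text{h}
$$

With an average object of only about 1 MB, a cross-provider copy also costs at least one GET on the source and one PUT on the destination per object, so per-request latency, not just raw bandwidth, dominates unless many transfers run in parallel.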

Yet, even with powerful machines, the risk of network problems or VM restarts interrupting the synchronization process remained. While Rclone handles restarts admirably by comparing object size, ETag, and modification time, the process becomes time-consuming and incurs additional costs for cloud-based S3, especially with very large buckets.

The missing pieces of the puzzle were the ability to run Rclone on multiple machines for better hardware utilization and the ability to track and store progress in remote persistent storage. With these goals in mind, we developed Chorus, a vendor-agnostic S3 backup, replication, and routing tool. Written in Go, Chorus uses Rclone to copy S3 objects, Redis to track progress, and the Asynq work queue to distribute load across multiple machines.

Setting up Chorus with multiple workers takes two steps. First, deploy Redis; then run the Chorus-worker service on each worker VM, providing it with the Redis connection credentials and the source and destination S3 credentials. Chorus workers expose a gRPC API for starting, stopping, pausing, and checking the status of bucket migrations, all of which is accessible through the CLI.
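The start, stop, pause, and status operations imply a small per-bucket lifecycle. The following is a hypothetical sketch of such a state machine: the state names, the `resume` operation, and the allowed transitions are our assumptions, not Chorus's gRPC definitions. "Status" simply reads the current state.

```go
package main

import "fmt"

// State is a hypothetical lifecycle state of one bucket migration.
type State string

const (
	Idle    State = "idle"
	Running State = "running"
	Paused  State = "paused"
	Stopped State = "stopped"
)

// transitions lists which operation is allowed from which state.
// Stopped has no entry, so it is terminal.
var transitions = map[State]map[string]State{
	Idle:    {"start": Running},
	Running: {"pause": Paused, "stop": Stopped},
	Paused:  {"resume": Running, "stop": Stopped},
}

// Migration tracks the state of a single bucket migration.
type Migration struct {
	Bucket string
	State  State
}

// Apply performs an operation if it is valid from the current state.
func (m *Migration) Apply(op string) error {
	next, ok := transitions[m.State][op]
	if !ok {
		return fmt.Errorf("cannot %s migration of %q in state %s", op, m.Bucket, m.State)
	}
	m.State = next
	return nil
}

func main() {
	m := &Migration{Bucket: "photos", State: Idle}
	for _, op := range []string{"start", "pause", "resume", "stop", "start"} {
		if err := m.Apply(op); err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println(op, "->", m.State) // the "status" call would return m.State
	}
}
```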

Worker config example:

```yaml
concurrency: 10 # max number of tasks that can be processed in parallel
redis:
  address: "127.0.0.1:6379"
  password: <redis password>
storage:
  createRouting: true # create routing rules to route proxy requests to the main storage
  createReplication: false # create replication rules to replicate data from the main storage to the other storages
  storages:
    one: # YAML key with a handy storage name
      address: s3.clyso.com
      credentials:
        user1:
          accessKeyID: <user1 v4 accessKey credential>
          secretAccessKey: <user1 v4 secretKey credential>
        user2:
          accessKeyID: <user2 v4 accessKey credential>
          secretAccessKey: <user2 v4 secretKey credential>
      provider: <Ceph|Minio|AWS|Other see providers list in rclone config> # https://rclone.org/s3/#configuration
      isMain: true # <true|false> only one storage should be main
      healthCheckInterval: 10s
      httpTimeout: 1m
      isSecure: true # set to false for an http address
      rateLimit:
        enable: true
        rpm: 60
    two: # YAML key with a handy storage name
      address: others3.clyso.com
      credentials:
        user1:
          accessKeyID: <user1 v4 accessKey credential>
          secretAccessKey: <user1 v4 secretKey credential>
        user2:
          accessKeyID: <user2 v4 accessKey credential>
          secretAccessKey: <user2 v4 secretKey credential>
      provider: <Ceph|Minio|AWS|Other see providers list in rclone config> # https://rclone.org/s3/#configuration
      isMain: false # <true|false> exactly one of the storages should be main
      healthCheckInterval: 10s
      httpTimeout: 1m
      isSecure: true # set to false for an http address
      rateLimit:
        enable: true
        rpm: 60
```
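The `isMain` comments state that exactly one storage should be the main one. A minimal sketch of checking that rule, assuming Go structs that mirror part of the example config: `Storage`, `validateStorages`, and `endpointURL` are hypothetical names, and YAML decoding (which needs a third-party package) is left out so the example stays self-contained.

```go
package main

import (
	"fmt"
	"sort"
	"time"
)

// Storage mirrors a subset of one entry under storage.storages (hypothetical type).
type Storage struct {
	Address             string
	Provider            string
	IsMain              bool
	IsSecure            bool
	HealthCheckInterval time.Duration
	HTTPTimeout         time.Duration
}

// validateStorages checks that every storage has an address and that
// exactly one storage is marked as main.
func validateStorages(storages map[string]Storage) error {
	var mains []string
	for name, s := range storages {
		if s.Address == "" {
			return fmt.Errorf("storage %q: address is required", name)
		}
		if s.IsMain {
			mains = append(mains, name)
		}
	}
	sort.Strings(mains) // stable error message despite random map order
	if len(mains) != 1 {
		return fmt.Errorf("exactly one storage must have isMain: true, found %d %v", len(mains), mains)
	}
	return nil
}

// endpointURL builds the endpoint URL, using http when isSecure is false.
func endpointURL(s Storage) string {
	scheme := "https"
	if !s.IsSecure {
		scheme = "http"
	}
	return scheme + "://" + s.Address
}

func main() {
	storages := map[string]Storage{
		"one": {Address: "s3.clyso.com", Provider: "Ceph", IsMain: true, IsSecure: true,
			HealthCheckInterval: 10 * time.Second, HTTPTimeout: time.Minute},
		"two": {Address: "others3.clyso.com", Provider: "Ceph", IsSecure: true,
			HealthCheckInterval: 10 * time.Second, HTTPTimeout: time.Minute},
	}
	if err := validateStorages(storages); err != nil {
		fmt.Println("invalid config:", err)
		return
	}
	fmt.Println("main endpoint:", endpointURL(storages["one"]))
}
```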

For more details, see the configuration docs.

Once Redis and the configured Chorus workers are running, you can use the CLI to connect to a worker's gRPC API and start migration for a specific bucket or for all available buckets. For each bucket, the worker emits a list-objects task, which lists the objects in the source bucket and emits a sync-object task for each listed object. Workers then sync objects in parallel using Rclone plus additional logic, such as copying bucket and object tags and ACLs.

Chorus CLI commands examples:

-

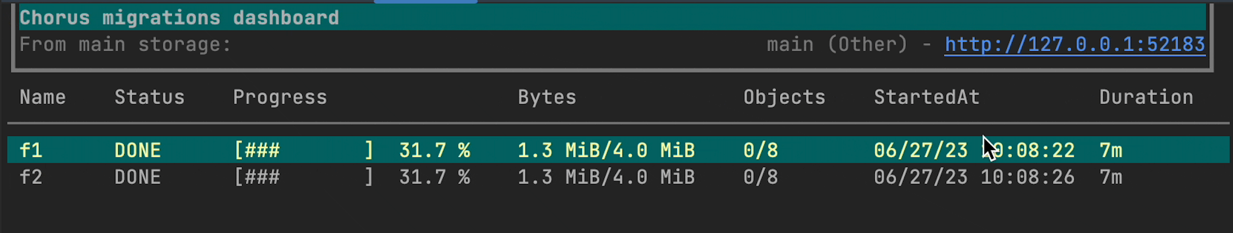

show live dashboard with bucket replication statuses:

chorctl dash

-

start replication for buket

chorctl repl add -b <bucket name> -u <s3 user name from chorus config> -f <souce s3 storage from chorus config> -t <destination s3 storage from chorus config>

The described flow requires stopping writes to the source bucket before the migration completes, which may not be acceptable in some cases. To address this, we implemented two interchangeable components: Chorus-proxy and Chorus-agent. Both capture live data changes in the source bucket during migration and emit the corresponding sync-object tasks for workers. Clients use the proxy in place of the source S3 endpoint, which lets it capture changes as they pass through, while the agent achieves the same goal using S3 bucket notifications.
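For the agent approach, S3 bucket notifications arrive as JSON event records. A minimal sketch of turning such a payload into sync-object tasks, assuming the AWS-style event format (`Records[].eventName`, `s3.bucket.name`, `s3.object.key`); fields can differ between providers, and `SyncTask` and `tasksFromNotification` are hypothetical names rather than Chorus-agent code.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/url"
	"strings"
)

// notification is the subset of an AWS-style S3 event notification used here.
type notification struct {
	Records []struct {
		EventName string `json:"eventName"`
		S3        struct {
			Bucket struct {
				Name string `json:"name"`
			} `json:"bucket"`
			Object struct {
				Key string `json:"key"`
			} `json:"object"`
		} `json:"s3"`
	} `json:"Records"`
}

// SyncTask is a hypothetical sync-object task for a worker.
type SyncTask struct {
	Bucket string
	Key    string
	Delete bool // true when the object was removed at the source
}

// tasksFromNotification converts create/remove events into sync-object tasks.
// Other event types (e.g. tagging) are ignored in this sketch.
func tasksFromNotification(payload []byte) ([]SyncTask, error) {
	var n notification
	if err := json.Unmarshal(payload, &n); err != nil {
		return nil, err
	}
	var tasks []SyncTask
	for _, r := range n.Records {
		// Object keys in notifications are URL-encoded (spaces become '+').
		key, err := url.QueryUnescape(r.S3.Object.Key)
		if err != nil {
			return nil, err
		}
		name := strings.TrimPrefix(r.EventName, "s3:") // tolerate an optional "s3:" prefix
		switch {
		case strings.HasPrefix(name, "ObjectCreated:"):
			tasks = append(tasks, SyncTask{Bucket: r.S3.Bucket.Name, Key: key})
		case strings.HasPrefix(name, "ObjectRemoved:"):
			tasks = append(tasks, SyncTask{Bucket: r.S3.Bucket.Name, Key: key, Delete: true})
		}
	}
	return tasks, nil
}

func main() {
	payload := []byte(`{"Records":[
		{"eventName":"ObjectCreated:Put","s3":{"bucket":{"name":"photos"},"object":{"key":"2024/cat+1.jpg"}}},
		{"eventName":"ObjectRemoved:Delete","s3":{"bucket":{"name":"photos"},"object":{"key":"old.jpg"}}}
	]}`)
	tasks, err := tasksFromNotification(payload)
	if err != nil {
		panic(err)
	}
	for _, t := range tasks {
		fmt.Printf("%+v\n", t)
	}
}
```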

This setup minimizes S3 downtime during migration. We are also exploring a zero-downtime setup in which Chorus-proxy switches routing to the destination bucket once migration completes. Additionally, we are working on a web UI as an alternative to the CLI.
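The routing switch in the zero-downtime idea can be sketched as an atomically swappable upstream. This illustrates the concept under our own assumptions (a hypothetical `Router` type backed by `sync/atomic.Value`, and "no replication backlog" as the switch condition); it is not how Chorus-proxy implements or plans to implement it.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// Router holds the upstream S3 endpoint that proxied requests are sent to.
type Router struct {
	upstream atomic.Value // always holds a string
}

// NewRouter creates a router pointing at the initial (source) endpoint.
func NewRouter(initial string) *Router {
	r := &Router{}
	r.upstream.Store(initial)
	return r
}

// Target returns the endpoint the next request should be forwarded to.
// It is safe to call concurrently with SwitchTo.
func (r *Router) Target() string { return r.upstream.Load().(string) }

// SwitchTo redirects all subsequent requests; requests already in flight
// keep the endpoint they loaded earlier.
func (r *Router) SwitchTo(endpoint string) { r.upstream.Store(endpoint) }

func main() {
	r := NewRouter("https://s3.clyso.com")
	fmt.Println("before:", r.Target())
	backlog := 0 // hypothetical: number of pending sync-object tasks
	if backlog == 0 {
		r.SwitchTo("https://others3.clyso.com")
	}
	fmt.Println("after:", r.Target())
}
```

The swap itself is the easy part; the hard part is deciding when it is safe, since the replication backlog must be empty and writes arriving around the switch must not be lost.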

We run Chorus on Kubernetes, and a Helm chart is available (see: install from the Clyso registry). Chorus exposes Prometheus metrics and produces logs in JSON format. For monitoring, we use the Loki and Grafana stack.

Read more in the project docs or on GitHub.